The Softvelum team, which operates the WMSPanel reporting service, continues to analyze the state of streaming protocols.

Now that 2016 is over, it's time to summarize what we can see looking back at the year. The 3200+ media servers connected to WMSPanel (running Nimble Streamer and Wowza) processed more than 34 billion connections. As you can tell, we have some decent data to analyze.

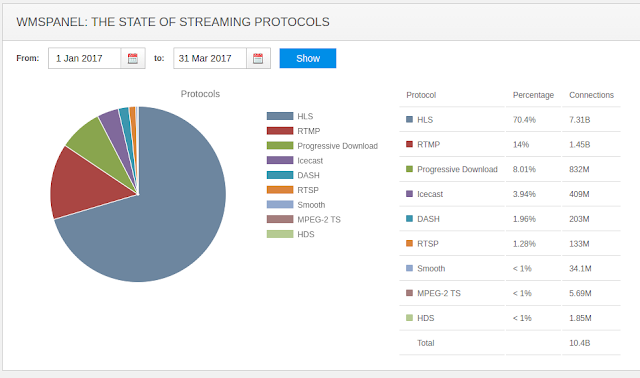

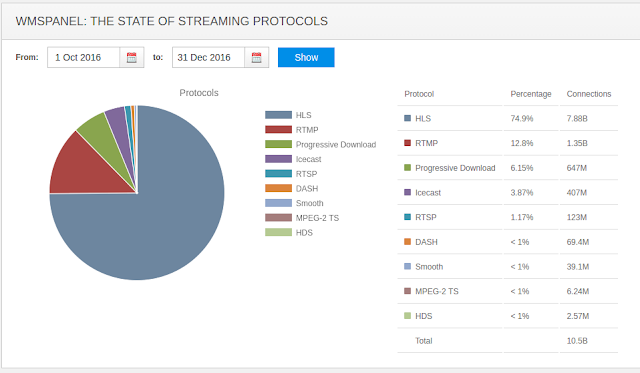

First, let's take a look at the chart and numbers:

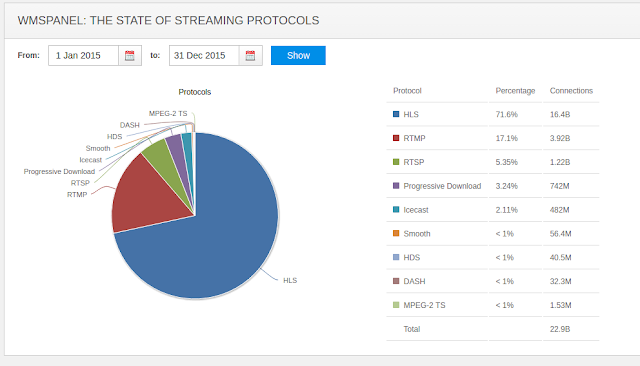

You can compare that to the picture of the 2015 protocols landscape:

At the end of 2015, the data had been collected from 2300+ servers.

The State of Streaming Protocols - 2015

What can we see?

- HLS is pretty stable at about 3/4 of all connections, with a strong 73% share. It's now a de facto streaming standard for consuming content on end-user devices.

- RTMP continues to go down: it has fallen to 10% from 17%. Low-latency streaming still requires this protocol, so it won't go away and will keep its narrow niche.

- RTSP keeps playing the same role of a real-time delivery protocol, but the lack of player support will keep it shrinking, as we see now with 4%, less than last year.

- Progressive download has strengthened its position to get 7% of the views.

- The Icecast streaming feature set was heavily improved in Nimble Streamer in 2016, so you can see its share growing to 3% of all connections. Online radios and music streaming services are popular, so we do our best to help them bring music to the people.

- MPEG-DASH overcame HDS (which seems to be fading away) and now competes with SmoothStreaming.

- The MPEG-TS feature set was also improved to allow using it in more use cases, so we see its count increase nearly 10 times. It has its niche, so we'll continue enhancing it.
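
To put the percentages above into perspective, here is a minimal Python sketch that turns them into rough connection counts. It is only an illustration, not how WMSPanel computes its reports. It assumes the total is exactly 34 billion (the post only says "more than 34 billion"), and it treats the quoted shares as exact although they are rounded. The function and variable names are hypothetical.

```python
# Shares quoted in the list above (percent of all connections in 2016).
SHARES_2016 = {
    "HLS": 73,
    "RTMP": 10,
    "Progressive download": 7,
    "RTSP": 4,
    "Icecast": 3,
}

# Assumption: the "more than 34 billion" total is taken as exactly 34 billion.
TOTAL_CONNECTIONS = 34_000_000_000


def estimated_connections(share_percent, total=TOTAL_CONNECTIONS):
    """Rough connection count implied by a percentage share."""
    return total * share_percent // 100


def relative_change(old_percent, new_percent):
    """Change of a share, expressed as a fraction of the old share."""
    return (new_percent - old_percent) / old_percent


if __name__ == "__main__":
    for protocol, share in SHARES_2016.items():
        billions = estimated_connections(share) / 1e9
        print(f"{protocol}: ~{billions:.1f} billion connections")

    # What is left for MPEG-DASH, HDS, SmoothStreaming and MPEG-TS together.
    remainder = 100 - sum(SHARES_2016.values())
    print(f"All other protocols: ~{remainder}%")

    # RTMP went from 17% to 10% of all connections.
    print(f"RTMP relative change: {relative_change(17, 10):.0%}")
```

Under these assumptions, the drop of RTMP from 17% to 10% is a loss of roughly two fifths of its previous share, and the protocols without an explicit percentage in the list share the remaining few percent.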

If you see any trends that we haven't mentioned, please share your feedback with us!

We'll keep analyzing protocols to see the dynamics. Check our updates on Facebook, Twitter or Google+.